Introducing LocalScore: A Local LLM Benchmark

Today, I'm excited to announce LocalScore – an open-source tool that both benchmarks how fast Large Language Models (LLMs) run on your specific hardware and serves as a repository for these results. We created LocalScore to provide a simple, portable way to evaluate computer performance across various LLMs while making it easy to share and browse hardware performance data.

We believe strongly in the power of local AI systems, especially as smaller models become more powerful. In addition, we expect computer hardware to become more powerful and cheaper for running these models. We hope this will create an opportunity for accessible and private AI systems, and that LocalScore will help you navigate this landscape.

Why We Built LocalScore

LocalScore grew out of a distributed computing network some friends and I were building together. Our little "mac mini network" became andromeda.computer, and we quickly found that we wanted to run private, always-on, local AI services. We started out with Piper TTS, and soon after added small vision models (shout-out to moondream), Whisper, and LLMs. As we experimented, questions emerged:

What compute is appropriate for each task?

How do we run the setup cost effectively?

How can it be portable across heterogeneous hardware?

Llamafile seemed like an obvious solution for the heterogeneous hardware acceleration problem. I helped to get Whisperfile off the ground so we could have acceleration on all of our hardware. In tandem, I started writing a benchmarking tool to answer the other questions. At some point Mozilla reached out, curious about why I had contributed Whisperfile and interested in sponsoring the community-driven benchmarking project. Thus LocalScore was born, built on top of Llamafile.

What is a LocalScore?

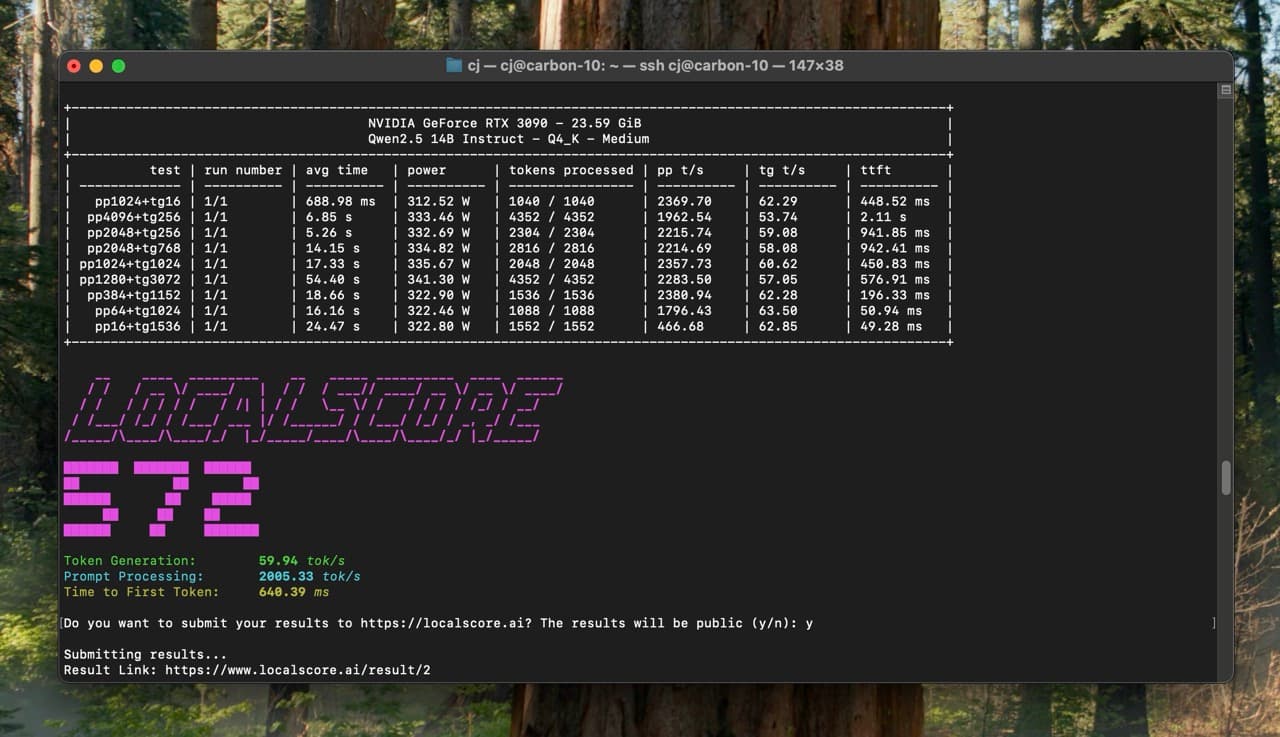

A LocalScore is a measure of three key performance metrics that matter for local LLM performance:

- Prompt Processing Speed: How quickly your system processes input text (tokens per second)

- Generation Speed: How fast your system generates new text (tokens per second)

- Time to First Token: The latency before the first response appears (milliseconds)

These metrics are combined into a single LocalScore, which gives you a straightforward way to compare different hardware configurations. A score of 1,000 is excellent, 250 is passable, and below 100 will likely mean a poor user experience in some regard.
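To make the three metrics concrete, here is a minimal sketch of how each could be derived from raw timings of a single run. The `RunTimings` structure and the function names are hypothetical and are not taken from the LocalScore codebase. How the three values are combined into the final score is not specified here, so the sketch stops at the individual metrics.

```python
from dataclasses import dataclass


@dataclass
class RunTimings:
    """Raw measurements from one benchmark run (hypothetical structure)."""
    prompt_tokens: int          # tokens in the input prompt
    generated_tokens: int       # tokens produced by the model
    prompt_seconds: float       # wall time spent processing the prompt
    generation_seconds: float   # wall time spent generating output
    first_token_seconds: float  # wall time from start until the first output token


def prompt_speed(t: RunTimings) -> float:
    """Prompt processing speed in tokens per second."""
    return t.prompt_tokens / t.prompt_seconds


def generation_speed(t: RunTimings) -> float:
    """Generation speed in tokens per second."""
    return t.generated_tokens / t.generation_seconds


def time_to_first_token_ms(t: RunTimings) -> float:
    """Time to first token in milliseconds."""
    return t.first_token_seconds * 1000.0


# Made-up numbers for illustration only, not real benchmark results.
example = RunTimings(
    prompt_tokens=1024,
    generated_tokens=16,
    prompt_seconds=0.5,
    generation_seconds=0.25,
    first_token_seconds=0.5,
)
print(f"prompt: {prompt_speed(example):.1f} tok/s")
print(f"generation: {generation_speed(example):.1f} tok/s")
print(f"time to first token: {time_to_first_token_ms(example):.1f} ms")
```

Note that two of the metrics are "higher is better" while time to first token is "lower is better", which is part of why a single combined score is convenient for comparisons.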

Under the hood, LocalScore leverages Llamafile to ensure portability across different systems, making benchmarking accessible regardless of your setup.

A Community-Driven Project

LocalScore is 100% open source (Apache 2.0) and we're excited to build it with the community. We welcome contributions of all kinds, from code improvements to documentation updates to feature suggestions. For more information on how to contribute and what we are looking forward to, scroll down and check the "How to Contribute" section.

LocalScore CLI

The LocalScore CLI is the first tool you can use to submit results to the LocalScore website. We hope it is the first of many. Because it is built on top of Llamafile, running the benchmark is as easy as downloading a single file and executing it on your computer. Neat.

The CLI can also be downloaded as its own executable and used to run models that are not bundled with it. That means you can benchmark any .gguf model you have lying around. In addition, Llamafile includes the LocalScore benchmark, and you can run it via `./llamafile --localscore`.

The Tests

The tests were designed to provide a realistic picture of how models will perform in everyday use. Instead of testing raw prompt processing and generation speeds, we wanted to emulate the kinds of tasks that users will actually be doing with these models. Below is a list of the tests we run and some of the use cases they are meant to emulate.

| PROMPT TOKENS | TEXT GENERATION | SAMPLE USE CASES |
|---|---|---|
| 1024 tokens | 16 tokens | Classification, sentiment analysis, keyword extraction. |
| 4096 tokens | 256 tokens | Long document Q&A, RAG, short summary of extensive text. |
| 2048 tokens | 256 tokens | Article summarization, contextual paragraph generation. |
| 2048 tokens | 768 tokens | Drafting detailed replies, multi-paragraph generation, content sections. |
| 1024 tokens | 1024 tokens | Balanced Q&A, content drafting, code generation based on long sample. |
| 1280 tokens | 3072 tokens | Complex reasoning, chain-of-thought, long-form creative writing, code generation. |
| 384 tokens | 1152 tokens | Prompt expansion, explanation generation, creative writing, code generation. |
| 64 tokens | 1024 tokens | Short prompt creative generation (poetry/story), Q&A, code generation. |
| 16 tokens | 1536 tokens | Creative text writing/storytelling, Q&A, code generation. |
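The test matrix above can also be written down as data, which makes it easy to see how the workloads are balanced. The sketch below is illustrative only: the `BenchmarkTest` type and helper names are hypothetical, and the token counts are copied from the table rather than from the LocalScore source.

```python
from typing import NamedTuple


class BenchmarkTest(NamedTuple):
    prompt_tokens: int     # size of the input prompt
    generated_tokens: int  # number of tokens to generate


# Token counts copied from the table above.
TESTS = [
    BenchmarkTest(1024, 16),
    BenchmarkTest(4096, 256),
    BenchmarkTest(2048, 256),
    BenchmarkTest(2048, 768),
    BenchmarkTest(1024, 1024),
    BenchmarkTest(1280, 3072),
    BenchmarkTest(384, 1152),
    BenchmarkTest(64, 1024),
    BenchmarkTest(16, 1536),
]


def total_tokens(test: BenchmarkTest) -> int:
    """Prompt plus generated tokens for one test."""
    return test.prompt_tokens + test.generated_tokens


def workload_kind(test: BenchmarkTest) -> str:
    """Label a test by which phase dominates its token count."""
    if test.prompt_tokens > test.generated_tokens:
        return "prompt-heavy"
    if test.prompt_tokens < test.generated_tokens:
        return "generation-heavy"
    return "balanced"


for test in TESTS:
    print(test.prompt_tokens, test.generated_tokens,
          total_tokens(test), workload_kind(test))

print("largest combined token count:", max(total_tokens(t) for t in TESTS))
```

Read this way, the suite has four prompt-heavy tests, four generation-heavy tests, and one balanced test, and no single test exceeds 4352 combined tokens.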

LocalScore Website

The LocalScore website is a public repository for LocalScore results. It is fairly simple for the time being, and we welcome community feedback and contributions to make it the best possible resource for the local AI community.

One of the major tensions in designing a website to serve the community is that people run an incredibly diverse set of models and computer hardware. With this in mind, we chose to limit the number of combinations we highlight, while still hopefully providing a useful resource.

One of our primary goals with LocalScore was to provide people with a straightforward way to assess hardware performance across various model sizes. To accomplish this, we established a set of "official benchmark models" that serve as reference points for different size categories. Currently this looks like the following:

| | Tiny | Small | Medium |
|---|---|---|---|
| # Params | 1B | 8B | 14B |
| Model Family | Llama 3.2 | Llama 3.1 | Qwen 2.5 |
| Quantization | Q4_K_M | Q4_K_M | Q4_K_M |
| Approx VRAM Required | 2GB | 6GB | 10GB |

These standardized benchmarks give you a reliable estimate of how your system will handle models within each size category, making it easier to determine which LLMs your hardware can effectively run. We are certainly interested in having community feedback on what the official benchmark models should be, and we are open to adding more models and sizes in the future.
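As a rough illustration of how the official benchmark models map onto hardware, the following sketch picks the largest official model whose approximate VRAM requirement fits a given GPU. The `OfficialModel` type and `largest_model_that_fits` function are hypothetical helpers, and the VRAM figures are the approximate values from the table above, so treat the result as a starting point rather than a guarantee.

```python
from typing import NamedTuple, Optional


class OfficialModel(NamedTuple):
    category: str        # size category used on the website
    params: str          # parameter count
    family: str          # model family
    quantization: str    # quantization format
    approx_vram_gb: int  # approximate VRAM required, in GB


# Values copied from the official benchmark models table.
OFFICIAL_MODELS = [
    OfficialModel("Tiny", "1B", "Llama 3.2", "Q4_K_M", 2),
    OfficialModel("Small", "8B", "Llama 3.1", "Q4_K_M", 6),
    OfficialModel("Medium", "14B", "Qwen 2.5", "Q4_K_M", 10),
]


def largest_model_that_fits(vram_gb: float) -> Optional[OfficialModel]:
    """Return the largest official model that fits in vram_gb, or None."""
    fitting = [m for m in OFFICIAL_MODELS if m.approx_vram_gb <= vram_gb]
    if not fitting:
        return None
    return max(fitting, key=lambda m: m.approx_vram_gb)


choice = largest_model_that_fits(8)
print(choice.category if choice else "no official model fits")
```

For an 8GB card this selects the Small category, since the Medium model's approximate 10GB requirement does not fit.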

Another goal was to allow people to submit results for any model, not just the official benchmarks. That way people can keep up with the latest trends for models, and still get a sense of how their hardware stacks up against others.

The two primary ways to find data for GPUs and models are clicking on their names or searching for them on the website. Clicking on a model shows a chart of how different accelerators perform on that model, along with a dedicated leaderboard for that model. Clicking on an accelerator shows a chart of how different models perform on that accelerator.

Getting Started

Using LocalScore is simple:

- Download the tool from localscore.ai/download

- Run the benchmark on your hardware

- Optionally submit your results to our public database

How to Contribute

- GitHub: The LocalScore website repository is where most development happens. Issues, discussions, and pull requests are all welcome.

- Bug Reports: Found an issue? Please report it through GitHub Issues.

- Feature Requests: Have an idea for how to improve LocalScore? Open a discussion in the GitHub repo.

- Code Contributions: Pull requests are very welcome, particularly around website features, visualizations, or UI improvements.

Scope and Focus

As with any benchmark, we need to balance comprehensiveness with practicality. While we'd love to cover every possible LLM configuration, we need to focus on common use cases that affect most users. This means:

- We can't cover every possible variation (speculative decoding, context lengths, etc.)

- We focus on single-GPU setups for now, as we expect this to be typical for most local users. We love enthusiasts who push the boundary of what is possible locally, but our focus is the more typical case.

That said, if there's strong community interest and support for specific features, we're open to thoughtfully expanding our scope.

Looking to the Future

- Upstreaming to llama.cpp: We'd love to see these benchmarking capabilities integrated directly into llama.cpp, making them available to an even wider audience. The current codebase is built on top of Llamafile, and a lot of the current code could be upstreamed with some effort since Llamafile is built on top of llama.cpp. The CLI client is a fork of the `llama-bench` program built into llama.cpp, modified to be more specific and user-friendly.

- Third-Party Clients: We welcome developers who want to create custom clients that interface with LocalScore. If you're building a client and would like to submit results to our database, please reach out to contact@localscore.ai. We are particularly interested in GUI clients as well, and maybe upstreaming to llama.cpp will enable this.

- Public APIs: We're considering opening up APIs for the website to allow programmatic access to benchmark data. If you have specific use cases in mind, please let us know.

- Expanded Metrics: In the future, we'd like to add an efficiency score which would take into account the power consumption of the accelerator. A lot of this code is in place, but needs work to be portable across more systems before it can be shipped.

- Beyond Text: While our current focus is on text models, we're interested in eventually benchmarking:

  - Vision models

  - Audio models (speech-to-text and text-to-speech)

  - Diffusion models

Join Us

LocalScore is a Mozilla Builders project created to serve the local AI community. We believe in the power of running AI locally, and we want to make that experience as smooth as possible for everyone.

Your feedback and contributions are not just appreciated; they're essential to making LocalScore a truly valuable community resource. Visit localscore.ai to try the benchmark, explore results, and join our community.